Tech Corner August 24, 2023

Model choice: An open architecture strategy for using LLMs

At Alkymi, our customers have strict data privacy policies and enterprise architecture requirements they need to adhere to, particularly when handling data on behalf of their own clients. Starting to use large language models (LLMs) in their workflows is an exciting opportunity, but it’s one they have to think through carefully and deliberately.

For customers to add generative AI to their workflows with confidence, a one-size-fits-all approach is not the answer, whether because of data security and architecture considerations or because of specific use cases. Adopting an LLM-powered platform that is tied to a single LLM provider or strategy is a potentially limiting decision. That's why we believe it's critically important to give our customers the power to decide which models they use, and to use our experience to guide them through that decision.

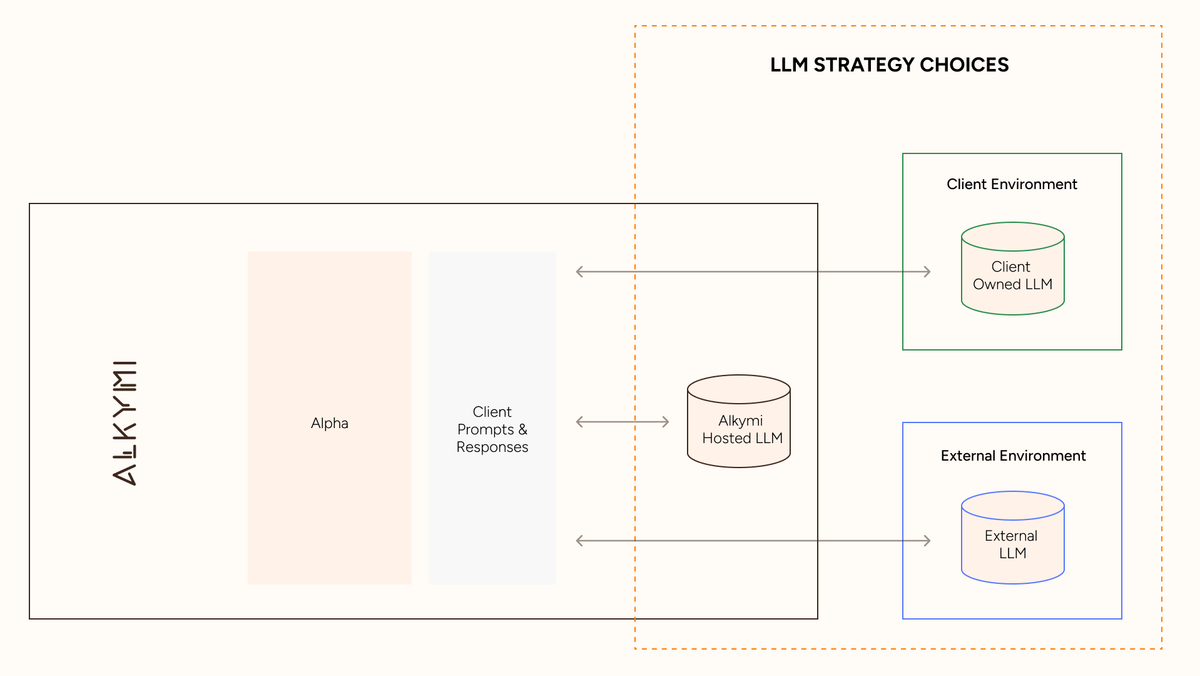

When using Alpha, our customers can decide between three LLM strategies:

- Alkymi-hosted LLMs:

These models are hosted securely and entirely within the Alkymi cloud environment (or the customer’s Private Cloud) and can function autonomously without external dependencies. When using an Alkymi-hosted model, such as Mistral or Meta's open source Llama 3, customer data remains fully contained within the Alkymi hosting infrastructure.

This option gives customers with strict security and data governance mandates the opportunity to use LLMs in their workflows without having to consider how third-party APIs handle their data.

- Externally-hosted LLMs:

Alkymi customers can use Alpha with third-party LLMs, such as GPT-4, which is integrated with the Alkymi platform through Microsoft Azure’s enterprise OpenAI API. Utilizing only vetted, enterprise-grade APIs ensures that our (and our customers’) data privacy and architectural standards are met. Any customer data sent and received through the API is secure, encrypted (both at rest and in transit), isolated, and not used for model training.

Alkymi users can choose from other third-party models that meet our baseline performance, security, and licensing criteria, giving them access to the best externally-hosted LLMs within a secure environment. These LLMs, such as Azure OpenAI’s, are a powerful option for those looking to strike the balance between performance and data privacy.

- Enterprise-hosted LLMs:

Many firms have active initiatives to create their own LLMs for specific use cases but are met with the challenge of then deploying them into their workflows in a way that allows business users to effectively adopt and engage with them long-term. Alkymi customers have the option to leverage their own LLMs, built and maintained within their own organizations, alongside the workflow, data validation, and integration capabilities of the Alkymi platform, via API.

This is the right option for users who have an in-house data science team or larger corporate LLM strategy and are either already utilizing their own LLMs or are developing LLMs in-house.

When integrating any new tool into their workflows, it’s as crucial for financial services firms to know that they’re leveraging best-in-class technology as it is for them to know exactly how their data and their customers’ data are being used. We want our customers to be not just informed but actively involved in that decision. Alkymi users can use any combination of these LLM strategies simultaneously within the platform, selecting the right LLM for each of their specific workflows.

One example of how Alkymi users are able to exercise choice is shown by our Answer Tool, available in Alkymi Alpha. Each time they add a new Answer Tool to their workflow, users can choose which model they would like to use as the answer-engine for their question from a drop-down menu.
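To make the idea of per-tool model choice concrete, here is a minimal sketch in Python. It is not Alkymi's implementation or API: the `Hosting` enum, the `CATALOG` entries, and the `dropdown_options` and `add_answer_tool` functions are all hypothetical names invented for illustration. It assumes each model is tagged with one of the three hosting strategies described above, and that a customer's data policy limits which strategies a given workflow may use.

```python
from dataclasses import dataclass
from enum import Enum


class Hosting(Enum):
    """The three LLM strategies described in this post."""
    ALKYMI_HOSTED = "alkymi-hosted"
    EXTERNALLY_HOSTED = "externally-hosted"
    ENTERPRISE_HOSTED = "enterprise-hosted"


@dataclass(frozen=True)
class ModelOption:
    name: str
    hosting: Hosting


# Hypothetical catalog: the identifiers are illustrative, not real product names.
CATALOG = [
    ModelOption("llama-3", Hosting.ALKYMI_HOSTED),
    ModelOption("mistral", Hosting.ALKYMI_HOSTED),
    ModelOption("gpt-4", Hosting.EXTERNALLY_HOSTED),
    ModelOption("in-house-model", Hosting.ENTERPRISE_HOSTED),
]


@dataclass
class AnswerTool:
    question: str
    model: ModelOption


def dropdown_options(allowed: set[Hosting]) -> list[str]:
    """Return the model names a workflow may offer, in catalog order."""
    return [m.name for m in CATALOG if m.hosting in allowed]


def add_answer_tool(question: str, model_name: str,
                    allowed: set[Hosting]) -> AnswerTool:
    """Create an answer tool bound to one model, enforcing the data policy."""
    for model in CATALOG:
        if model.name == model_name:
            if model.hosting not in allowed:
                raise ValueError(
                    f"{model_name} ({model.hosting.value}) is not permitted "
                    "for this workflow"
                )
            return AnswerTool(question, model)
    raise KeyError(f"unknown model: {model_name}")


if __name__ == "__main__":
    # A workflow restricted to models hosted inside the Alkymi environment.
    strict = {Hosting.ALKYMI_HOSTED}
    print(dropdown_options(strict))

    # A second workflow on the same platform that also allows external models.
    relaxed = {Hosting.ALKYMI_HOSTED, Hosting.EXTERNALLY_HOSTED}
    tool = add_answer_tool("Who is the fund administrator?", "gpt-4", relaxed)
    print(tool.model.name, tool.model.hosting.value)
```

Binding the model to each tool rather than to the whole platform is what lets two workflows with different data policies coexist, and swapping a model becomes a change to one catalog entry or one tool setting.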

Large language models can generate insights quickly and with amazing accuracy, providing concise answers from large documents and data sets. As LLMs continue to make rapid advancements, it will be critical for enterprises to be able to chart their own path. As new models with different performance and data privacy characteristics are deployed, the ability to quickly adopt and interchange models that meet different needs is crucial.

We’re making this choice possible at Alkymi by giving our customers an understanding of the available models and the option to choose the ones best suited to their needs, so they can use LLMs in their workflows with confidence.
